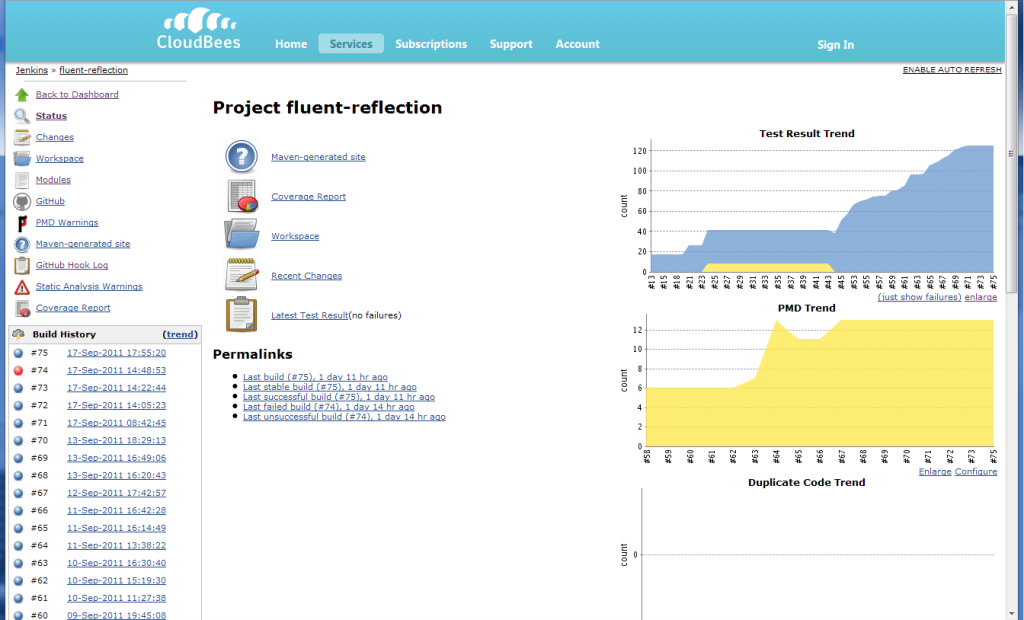

Edit 2012/12/13: I have created a library that corrects some of the issues with EventBus that I describe below.

I have been using EventBus from the Google Guava library to build a customized installation system for a bespoke software product that consists of a large number of components. Here are some of my experiences with EventBus.

Using EventBus with Guice

I use Guice heavily in almost all of the new code I write. Guice is the new `new`. Using EventBus with Guice is straightforward: bind a type listener that registers every injected object with the EventBus.

    // Inside the configure() method of a Guice AbstractModule.
    final EventBus eventBus = new EventBus("my event bus");
    bind(EventBus.class).toInstance(eventBus);

    // Register every object that Guice injects with the event bus.
    bindListener(Matchers.any(), new TypeListener() {
        @Override
        public <I> void hear(@SuppressWarnings("unused") final TypeLiteral<I> typeLiteral, final TypeEncounter<I> typeEncounter) {
            typeEncounter.register(new InjectionListener<I>() {
                @Override
                public void afterInjection(final I instance) {
                    eventBus.register(instance);
                }
            });
        }
    });

You get an instance of the event bus simply by using any of Guice’s normal dependency injection mechanisms:

    class MyClass
    {
        private final EventBus eventBus;

        @Inject
        public MyClass(final EventBus eventBus)
        {
            this.eventBus = eventBus;
        }

        [...]

Listening for Events

To listen for an event, add the `@Subscribe` annotation to a method in your class that takes the event as its single parameter, and get an instance of that class from Guice.

    @Subscribe
    public void myEventHappened(final MyEvent event)
    {
        // do work
    }

Easy to miss off annotations in Guava EventBus

    public void myEventHappened(final MyEvent event)
    {
        // do work
    }

If I miss an annotation off a subscriber method, nothing tells me that something has gone wrong. The compiler won’t tell me, Guice won’t tell me, and EventBus will happily register an object that has no `@Subscribe` methods at all. I have to write module-level tests that check that all of my subscriber methods actually get called by the EventBus. This is fine, and I probably do that anyway. But it’s easy to make a mistake, and I’d like either something more robust or “Guice-style” fail-fast.
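One way to get closer to fail-fast is a small reflection check that a listener class really has an annotated method. The following is a minimal sketch, not part of Guava or Guice: `SubscriberChecks`, `requireAnnotatedMethod`, `GoodListener` and `ForgetfulListener` are hypothetical names, and `Handles` is a stand-in annotation so that the sketch compiles without Guava on the classpath. In real use you would pass Guava's `Subscribe.class` instead.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

// Hypothetical stand-in for com.google.common.eventbus.Subscribe, used only
// so that this sketch has no dependency on Guava.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Handles {}

final class SubscriberChecks {
    private SubscriberChecks() {}

    // Throws if neither the class nor any of its superclasses declares a
    // method carrying the given annotation. Interfaces are not inspected.
    static void requireAnnotatedMethod(Class<?> type, Class<? extends Annotation> annotation) {
        for (Class<?> c = type; c != null; c = c.getSuperclass()) {
            for (Method method : c.getDeclaredMethods()) {
                if (method.isAnnotationPresent(annotation)) {
                    return;
                }
            }
        }
        throw new IllegalStateException(
            type.getName() + " has no method annotated with @" + annotation.getSimpleName());
    }
}

// The annotation is present, so the check passes.
class GoodListener {
    @Handles
    public void myEventHappened(String event) {
        // do work
    }
}

// The annotation was forgotten, so the check throws.
class ForgetfulListener {
    public void myEventHappened(String event) {
        // do work
    }
}
```

A module-level test could call `SubscriberChecks.requireAnnotatedMethod` once for each class that is meant to listen. It should not be applied to every object Guice injects, because most of those objects legitimately have no subscriber methods.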

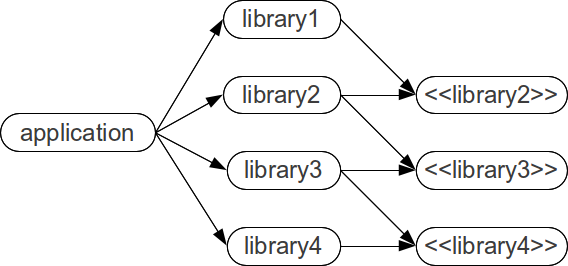

Loose coupling between sender and listener

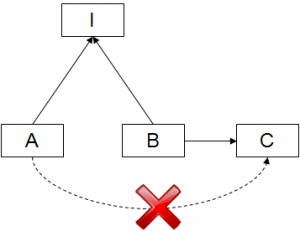

EventBus creates very loose coupling between the class that sends an event and the class that listens for it. The only thing the two classes share is knowledge of the type of the event being communicated. EventBus isolates the sending class from failures in the listening class. By using an AsyncEventBus, it can also isolate the sending class from the performance of the listening class.

EventBus doesn’t work well with my normal IDE code navigation tools

Because of the very loose coupling between sender and listener, it can be hard to find all the methods that listen for a given event class, say `E`. You have to do a search like “find all occurrences of the class E” and then sort out which ones are your `@Subscribe` methods. The same is true for finding all the senders of a particular event: you need to say “find all the calls to `post` that are given an argument with the runtime type E”. I believe both of these navigations are hard to do in an IDE.

Inversion of Control

EventBus allows the wiring of the Sender->Listener relationship to come up to the top level of the application. Although this isn’t inherent in EventBus, the way it integrates with Guice provides a natural, decoupled way to wire up your application. Exactly which EventBus instance each of your objects gets registered with and sends to can be configured using all the normal Guice features.

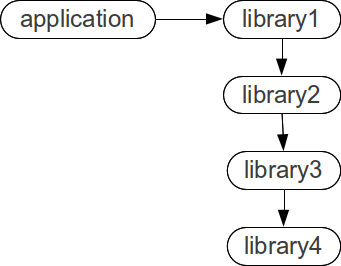

EventBus gets coupled into your codebase

If a class needs to send a message, it has to be injected with an instance of the EventBus. If a class needs to subscribe to a message, it has to have some of its methods annotated with the `@Subscribe` annotation. I can wrap up the EventBus in my own custom wrapper, but that doesn’t fix the `@Subscribe` annotation issue. I’d like something that has less coupling throughout my code.

EventBus is hard to mock

I tend to use a lot of London-school (JMock) style unit tests. EventBus is a little hard to use in this style. Two issues in particular are awkward: 1) EventBus is a class with no interface, so mocking it requires a certain amount of technology and is generally not as clean as I would like. 2) The `post` method takes a single event object. I end up having to write custom matchers for each part of my event object I might be interested in, or implementing `equals`, `hashCode` and `toString` for all of my event classes. With a normal method call, I can choose to be interested only in whether a particular named method gets called, or interrogate each of the arguments using any of the collection of matchers that I have built up.
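One way to soften the first issue is to hide the bus behind a narrow interface and substitute a recording fake in tests. This is a minimal sketch under that assumption, not something Guava provides: `EventPublisher`, `RecordingPublisher`, `ComponentInstalled` and `Installer` are hypothetical names. A production implementation (not shown) would simply delegate to `EventBus.post`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical narrow interface that production code depends on instead of
// depending on the EventBus class directly.
interface EventPublisher {
    void post(Object event);
}

// Hand-written test double that records everything that was posted.
final class RecordingPublisher implements EventPublisher {
    private final List<Object> posted = new ArrayList<>();

    @Override
    public void post(Object event) {
        posted.add(event);
    }

    // Returns the recorded events of the given type, in posting order.
    <T> List<T> postedOfType(Class<T> type) {
        List<T> matches = new ArrayList<>();
        for (Object event : posted) {
            if (type.isInstance(event)) {
                matches.add(type.cast(event));
            }
        }
        return matches;
    }
}

// Hypothetical event class, used only to exercise the fake.
final class ComponentInstalled {
    final String componentName;

    ComponentInstalled(String componentName) {
        this.componentName = componentName;
    }
}

// Hypothetical sender that only knows about the interface.
final class Installer {
    private final EventPublisher publisher;

    Installer(EventPublisher publisher) {
        this.publisher = publisher;
    }

    void install(String componentName) {
        // ... real installation work would go here ...
        publisher.post(new ComponentInstalled(componentName));
    }
}
```

A test can then inspect the fields of the recorded events directly, which avoids implementing `equals` and `hashCode` on every event class just for the benefit of the tests. Because `EventPublisher` is an interface, it can also be mocked in the usual way. None of this removes the need for the `@Subscribe` annotation on the listening side.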

EventBus causes the definition of lots of `event` classes

EventBus has the effect of forcing the developer to define all the events used by their application as classes in the system (you can send any object as a message). This can be helpful in that it forces a clear definition of the message for each event. But it feels a little un-Java-like, in the sense that a message in Java is usually thought of as a combination of the method name and the method parameters. In EventBus the method name in the listener is irrelevant to the meaning of the message, and only one parameter is allowed in a `@Subscribe` method: the event instance. This overloads the parameter so that it carries both the data required by the `@Subscribe` method and the meaning of the message. It also produces packing and unpacking boilerplate: each send has to package the message up into the event object, and each `@Subscribe` method has to unpack it.
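The contrast can be sketched in plain Java. All names here (`InstallFailed`, `InstallListener`, `FailureLog`, `anyNameWillDo`) are hypothetical and chosen only for illustration. The sketch leaves out Guava itself, so the `@Subscribe` annotation appears only in a comment.

```java
// Hypothetical event class: the class name carries the meaning of the
// message, and the fields carry its data.
final class InstallFailed {
    private final String component;
    private final String reason;

    InstallFailed(String component, String reason) {
        this.component = component;
        this.reason = reason;
    }

    String getComponent() {
        return component;
    }

    String getReason() {
        return reason;
    }
}

// Plain-Java style: the method name is the message and the parameters are
// the data, so nothing needs to be packed or unpacked.
interface InstallListener {
    void installFailed(String component, String reason);
}

// EventBus style: with Guava this method would carry @Subscribe. Its name
// plays no part in dispatch, and it has to unpack its single parameter.
final class FailureLog {
    String lastLine;

    public void anyNameWillDo(InstallFailed event) {
        lastLine = event.getComponent() + ": " + event.getReason();
    }
}
```

The sender pays the matching cost: instead of calling `installFailed("database", "disk full")` it has to construct `new InstallFailed("database", "disk full")` and post it.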

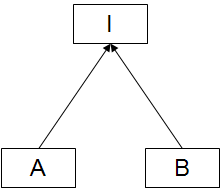

Dynamic dispatch

EventBus events are delivered according to the runtime type of the event (similar to the way exceptions are caught). This allows `@Subscribe` methods to be very specific about responding only to the exact event they are interested in, but it can make it a little difficult to reason about whether a particular call to `post` will cause a particular `@Subscribe` method to be invoked.
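The matching rule can be modelled in a few lines. This is a sketch of the rule as I understand it, not Guava's implementation, and it does not call Guava: `DispatchRule`, `wouldBeInvoked`, `InstallEvent` and `InstallFailedEvent` are hypothetical names. The assumption is that a subscriber method is invoked when the posted event is an instance of the method's parameter type, just as a `catch` clause matches subclasses of the declared exception type.

```java
// Hypothetical event hierarchy used to exercise the rule.
class InstallEvent {}

class InstallFailedEvent extends InstallEvent {}

final class DispatchRule {
    private DispatchRule() {}

    // Models the matching rule only. A subscriber method whose single
    // parameter has type parameterType is assumed to be invoked for an
    // event whose runtime type is that type or any subtype of it.
    static boolean wouldBeInvoked(Class<?> parameterType, Object event) {
        return parameterType.isInstance(event);
    }
}
```

Under this model a subscriber that takes `InstallEvent` also sees every `InstallFailedEvent`, and a subscriber that takes `Object` sees everything. That is what makes it hard to tell from a single `post` call which methods will run.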